Online entertainment keeps getting more advanced. We’ve come up with just about everything, from video games to robots. But one thing you may not know yet is that more kids are spending time with online bots instead of their human friends. In today’s GKIS article, we’re doing a deep dive into Character.AI (C.AI), a popular website that lets users chat with, and even virtually date, AI-powered bots. We’ll go over how it’s being used, its dangers, and our thoughts on the site. Before letting your child use any new and popular app, we recommend our Screen Safety Essentials Course for tips on how to navigate the internet safely as a family.

Artificial Intelligence and Bots

Before we dive into the world of C.AI, let’s go over some key terms.

- Artificial Intelligence refers to the capability of computer systems or algorithms to imitate intelligent human behavior.[1]

- A bot is a computer program or character (as in a game) designed to mimic the actions of a person.[2] Many bots, including the ones on C.AI, use artificial intelligence.

- NSFW refers to “not safe (or suitable) for work.” NSFW is used to warn someone that a website, image, message, etc., is not suitable for viewing at most places of employment.[3]

What is C.AI?

Character.AI is a website created by Noam Shazeer and Daniel De Freitas that allows users to chat with bots. The C.AI website launched in September 2022, and the app was released in May 2023. In its first week after launch, the app was downloaded 1.7 million times.[4]

C.AI uses artificial intelligence to let you create characters and talk to them. You can create original characters, base yours on a character from a TV show or movie, or base your character on a real person.

C.AI became popular when teens started sharing their conversations with C.AI bots on TikTok. Many teens showed romantic and sensual conversations they had with their bots. Week after week, more teens around the world began to fall in love with their new artificial friends.

How Teens Are Using C.AI

Users create a free account and then choose from a list of characters to talk to or make their own. Users can talk about whatever they want with the bot, and it will reply with human-like responses. Pre-made characters have their own set personality that users cannot change.

To make their own custom bot, users choose a name for their character and then upload an image to give the bot a ‘face.’ When the bot responds, users rate its responses from 1 to 5 stars. Over time, the bot uses these ratings to learn what personality the user wants it to have.

Users can make their bots private (only they can use them) or public (anyone can use them). Either way, the chats between a user and a bot are private and are not visible to other users.

The Dangerous Side of C.AI

Using these bots may seem like a fun idea for kids, but there are a lot of risks that come with them.

Data Storage

A major risk is that C.AI stores the information and texts you share with the character bots.

C.AI claims that no real person reads this information. However, storing it is still a privacy risk. If the website or app were hacked, hackers could do whatever they wanted with users’ information. This puts every user at major risk.

No Age Verification and Exposing Minors to NSFW Content

C.AI encourages its users to be 13 years old or older, but there is no age verification within the site or app.[5] This means users can lie about their age to use C.AI.

C.AI says it does not allow sexual conversations between users and bots, but users can get around this rule. Users can misspell certain words or add extra spaces to them to slip past the NSFW filter. The bot still recognizes the intended word, so it replies with NSFW responses, and users can end up having detailed sexual conversations with the bots. This is especially dangerous because many of C.AI’s users are minors.

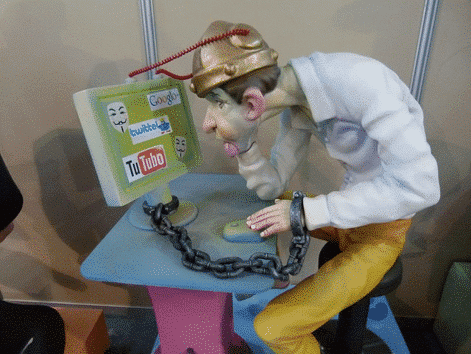

Effects on Children’s Relationships

Users can speak romantically with the bots, and the bots will respond with romantic messages. The more kids use these bots, the more likely they are to become dependent on them. Children’s brains are impressionable, and they absorb information quickly. Some kids may come to prefer these fake relationships over relationships with real people.

Using these bots could also contribute to social anxiety. Users know what to expect when talking with a bot because its personality is pre-set. Real people, however, are unpredictable. The uncertainty of real conversations could make users shy, anxious, and avoidant, especially if they replace challenging real-life social practice with safe and easy online practice.

Other risks include:

- Disappointment in real-life relationships with others

- Depression

- Isolation

- Loss of social skills

GKIS Thoughts On C.AI

GKIS rates C.AI as a red-light website. This means we do not recommend it for anyone under the age of 18. We came to this conclusion because the site lacks age verification and exposes minors to NSFW content. However, it could be slightly safer if parents monitor their children’s interactions with the bots. If you’re worried about other dangerous sites your child may be visiting, check out our article on red-light websites.

GKIS encourages parents to talk with their children about which topics are safe to discuss if they use C.AI. Before deciding whether your child should use the site, we recommend checking out the GKIS Social Media Readiness Training course. It helps teens and tweens learn the red flags of social media and teaches them valuable psychological wellness skills.

Thanks to CSUCI intern Samantha Sanchez for researching Character.AI and preparing this article.

I’m the mom psychologist who will help you GetKidsInternetSafe.

Onward to More Awesome Parenting,

Dr. Tracy S. Bennett, Ph.D.

Mom, Clinical Psychologist, CSUCI Adjunct Faculty

GetKidsInternetSafe.com

Works Cited

[1] Artificial Intelligence – Merriam-Webster

[4] Character.AI

Photo Credits

Pete Linforth via Pixabay https://pixabay.com/illustrations/connection-love-modern-kiss-human-4848255/

Samantha Sanchez (Image #2)

Adrian Swancar via Unsplash https://unsplash.com/photos/JXXdS4gbCTI